Sibo Zhu (朱思博)

sibozhu AT mit.edu

Massachusetts Institute of Technology

Welcome to my personal website!

My name is Sibo Zhu, and I am currently a research assistant in the MIT Department of Electrical Engineering and Computer Science (EECS). I am excited to be working with Prof. Song Han in the HAN (Hardware, Accelerators, and Neural Networks) Lab on robotic perception and efficient deep learning.

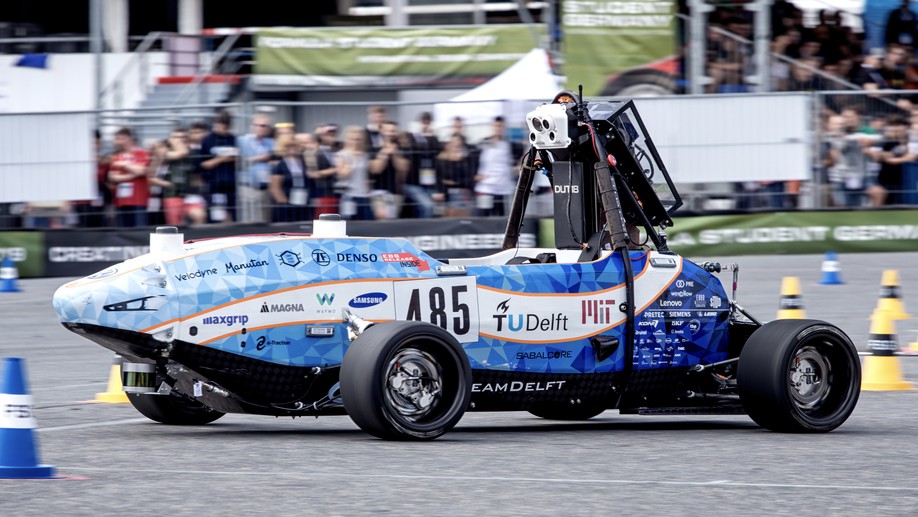

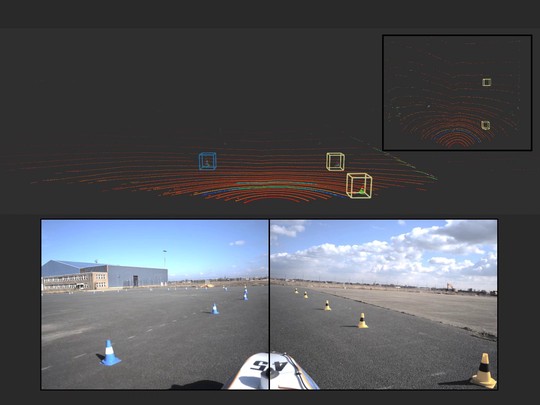

I am also the perception lead at MIT Driverless, a student-led high-speed autonomous racing team that develops full-scale vehicles and autonomous software to compete in driverless racing competitions.

Before coming to MIT, I received my M.S. in Computer Science from Brandeis University. During my master's, I was fortunate to work with Prof. Hongfu Liu. Prior to that, I received my B.A. in Computer Science and B.A. in Pure & Applied Mathematics from Boston University, where I worked closely with Prof. Sang (“Peter”) Chin.

Outside of research, I enjoy snowboarding, skydiving, running, hiking, working out, cooking, movies, music, and especially travelling, all of which help me balance work and life.

Interests

- Robotics

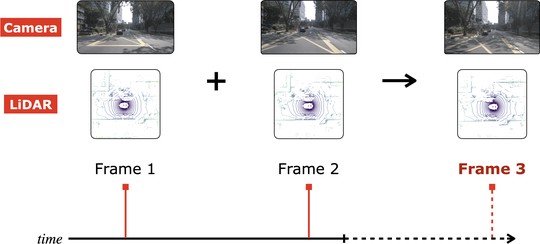

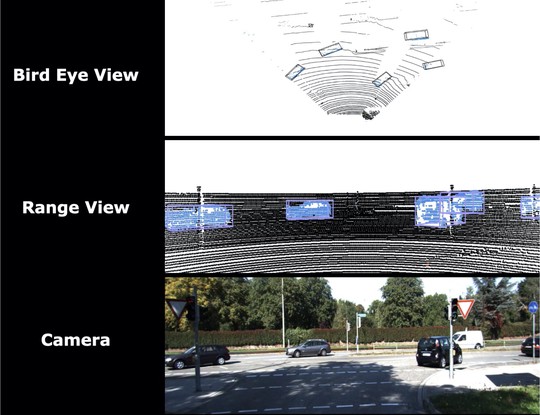

- Perception

- Efficient Deep Learning

- Data Mining

Education

M.S. in Computer Science, 2020

Brandeis University

B.A. in Computer Science, 2018

Boston University

B.A. in Pure & Applied Mathematics, 2018

Boston University